Learning 3D Point Cloud Descriptors for Robust Localization

Autonomous robots require robust methods to consistently localize in a map. Previous work has shown the benefits of using structure-based localization but relied on the use of expensive LIDARs. This work explores the use of stereo cameras to generate 3D data instead. To augment the quality of the noisy point clouds, the use of color and semantic information for both segmentation and description of the points clouds is evaluated. Experiments show a significant improvement of the descriptor performance. Overall, up to 82% of the frames are localized with 90% of the localization errors below 3m and 60% below 1m. The end-to-end localization performance is shown to approach that of lidar but suffers from suboptimal tracking which warrants further investigation.

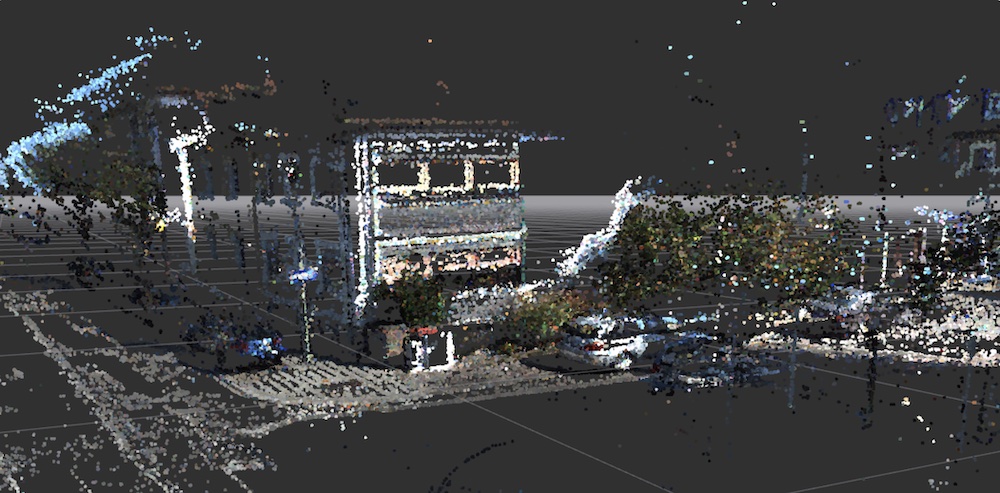

I explored a variety of methods to improve the baseline of 3D descriptors using deep neural networks such as 3D convolutional neural nets, graph convolutional neural nets to deal with the inherent problem of permutation invariance when dealing with 3D point clouds (effectively sets). The final system used 2D semantic segmentation on RGB images, stereo matching to generate 3D point clouds and XYZ. The benefit of this method is the fact that it requires no expensive Lidar but enables localization in GPS denied environments where simple visual localization may fail (greatly different view point - talk about reconstruction that implicitly happens in learning descriptors via Autoencoder).